High-performance computing: supercomputers driving innovation

The ‘Noctua 2’ and ‘Otus’ supercomputers form the heart of Paderborn University’s high-performance computing centre. Researchers across the country use the capacity of these high-performance systems to run computer simulations and conduct scientific enquiry at the highest level. High-performance computing (HPC) is used in a variety of disciplines, including quantum, climate and pharmaceutical research.

Latest update: At the ISC in Hamburg, the international trade fair for high-performance computing (HPC), artificial intelligence, data analytics and quantum computing, “Otus” reached number 5 on the so-called “Green 500”, the list of the world's most energy-efficient computer systems. The “Green 500” and “Top 500” rankings are regarded as top indicators in scientific and IT circles. While the “Top 500” is exclusively concerned with speed, the “Green 500” ranks systems by speed in relation to electrical power consumption, which yields a measure of energy efficiency.
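The difference between ranking by speed and ranking by efficiency can be illustrated with a short calculation. The following Python sketch is only an illustration: the function name, the system names and all figures are hypothetical and are not measurements of ‘Otus’ or of any real machine. It assumes that efficiency is expressed as sustained performance divided by power draw.

```python
def energy_efficiency(gflops: float, power_watts: float) -> float:
    """Return performance per watt (GFLOPS/W) for one system."""
    if power_watts <= 0:
        raise ValueError("power draw must be positive")
    return gflops / power_watts


# Hypothetical systems, not real measurements:
# name -> (sustained performance in GFLOPS, power draw in watts)
systems = {
    "fast_but_hungry": (2_000_000.0, 100_000.0),
    "slower_but_lean": (1_000_000.0, 25_000.0),
}

# Ranking by raw speed only (the idea behind a speed list).
by_speed = sorted(systems, key=lambda name: systems[name][0], reverse=True)

# Ranking by speed in relation to power consumption (the idea behind an efficiency list).
by_efficiency = sorted(
    systems,
    key=lambda name: energy_efficiency(*systems[name]),
    reverse=True,
)
```

In this made-up example the faster system leads the speed ranking, while the slower system leads the efficiency ranking because it delivers more operations per watt.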

Computational sciences

Observing nature, conducting experiments, lots of theory: before the first computer was invented, this is how researchers had to work to understand specific processes, operations and developments. State-of-the-art computer programs now also give scientists the ability to create precise simulations and models that come closer and closer to reality. The benefits of computational science are undeniable: experiments that would once have been extremely laborious, expensive, dangerous or simply impossible can be simulated on a computer. In addition, simulations can be used to predict future developments, for example regarding climate change. Calculations are run across various scenarios to ascertain: what changes if our average temperature increases by three degrees Celsius? What about an increase of just two degrees? Other benefits are perfect reproducibility and the fact that the results produced by computers can provide new explanations for why particular things happen. Using supercomputers, researchers are able to trawl through huge quantities of data within a short period of time and identify patterns.

High-performance computing as a key technology for numerous scientific disciplines

A total of 140,000 processor cores in the ‘Noctua 2’ supercomputer in Paderborn have been working on highly complex tasks since the beginning of 2022. ‘Otus’, which will be launched in the third quarter of 2025, has twice the computing power of the previous Noctua system. It offers 142,656 processor cores, 108 GPUs, AMD processors of the current “Turin” generation and an IBM Spectrum Scale file system with five petabytes of storage capacity. The computers are operated by the Paderborn Center for Parallel Computing (PC2), an interdisciplinary research institute within the university. Its expertise lies in atomistic simulations, computational physics and optoelectronics. Various computer programs have also been developed and put to use in Paderborn for this purpose.

A supercomputer is like a time machine, as researchers can use it to calculate something that would take ten years with conventional computers. They can then take efficient advantage of this head start to devote their efforts to topics relevant to our society – such as sustainable energy technology. For example, researchers can use ‘Noctua 2’ and ‘Otus’ like a microscope to examine things more closely – with the difference being that they can view individual atoms and their interactions, i.e. even better than any microscope in any laboratory. And the whole thing moves extremely quickly: numerous simulations can be run within a very short period of time, enabling millions of structures to be tested. This deeper insight into processes helps us understand how particular chemical reactions operate on an atomic level, for example.

Good to know: key terms and the history of the supercomputer

Conventional personal computers undertake ‘simple’ calculations. Supercomputers work in ‘parallel’, as the tasks they must complete are extremely complex: the work is divided among parallel structures, each looking after an individual work package. Parallel computing can be compared to passenger transport: in this analogy, vehicles represent the processors. In the past, there was a single car that always drove from A to B to transport passengers. Eventually, buses began to be used as they had space for more people, but they were still just travelling from A to B. Today, however, so many people want to travel the route that multiple buses and even trains are deployed at once. Similarly, a supercomputer has numerous processors working in parallel, enabling it to achieve much more than it could with a single processor. The biggest challenge for researchers wanting to use a parallel computer such as ‘Noctua 2’ or ‘Otus’ for their work is to work out which parts of the problem can be calculated in parallel, i.e. separately.
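The idea of splitting a task into independent work packages can be sketched in a few lines of Python. This is a minimal illustration, not the software used on ‘Noctua 2’ or ‘Otus’: all function names are hypothetical, the task (summing squares) is chosen only because its parts do not depend on each other, and a small thread pool from the standard library stands in for the many processors of a real supercomputer.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_packages(start: int, stop: int, n_packages: int) -> list[range]:
    """Split the range [start, stop) into n_packages contiguous work packages."""
    if n_packages < 1:
        raise ValueError("need at least one package")
    base, extra = divmod(stop - start, n_packages)
    packages = []
    lo = start
    for i in range(n_packages):
        # The first `extra` packages take one additional item each.
        size = base + (1 if i < extra else 0)
        packages.append(range(lo, lo + size))
        lo += size
    return packages


def sum_of_squares(package: range) -> int:
    """Process one work package; it needs no data from any other package."""
    return sum(x * x for x in package)


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Sum the squares of 0..n-1 by handing one package to each worker."""
    packages = split_into_packages(0, n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(sum_of_squares, packages))
    # Combining the partial results is the only step that is not independent.
    return sum(partial_results)
```

Calling `parallel_sum_of_squares(1000)` gives the same result as the sequential sum. In the transport analogy, each package is one busload of passengers, and the final `sum` is the point where everyone arrives at B.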

The development of the computer has been extremely dynamic and exponential: whether mainframes or smartphones, in two years’ time devices will be twice as quick as they are now. One of the first commercially operated supercomputers was the ‘Cray 2’, developed in 1985. Its computing power was about the same as that of an Apple iPad 2. Six years later, massively parallel computing technology was used for the first time in the ‘Connection Machine CM-5’. There have been countless further developments since then. The world’s most powerful supercomputer (as of June 2023) is ‘Frontier’ in the USA. Supercomputers are now primarily used in the fields of science and engineering, but are not yet so commonly employed in the humanities or social sciences.
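The rule of thumb mentioned above, that devices become twice as quick every two years, describes exponential growth. A small Python sketch shows what that implies over longer periods. The function name is hypothetical, and the two-year doubling period is taken from the text as a rough assumption rather than a guaranteed law.

```python
def relative_speed(years: float, doubling_period_years: float = 2.0) -> float:
    """Speed relative to today, assuming performance doubles every doubling period."""
    return 2.0 ** (years / doubling_period_years)


# Under this assumption, a device ten years from now has gone through
# five doublings: 2 * 2 * 2 * 2 * 2 = 32 times today's speed.
speed_after_ten_years = relative_speed(10)
```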

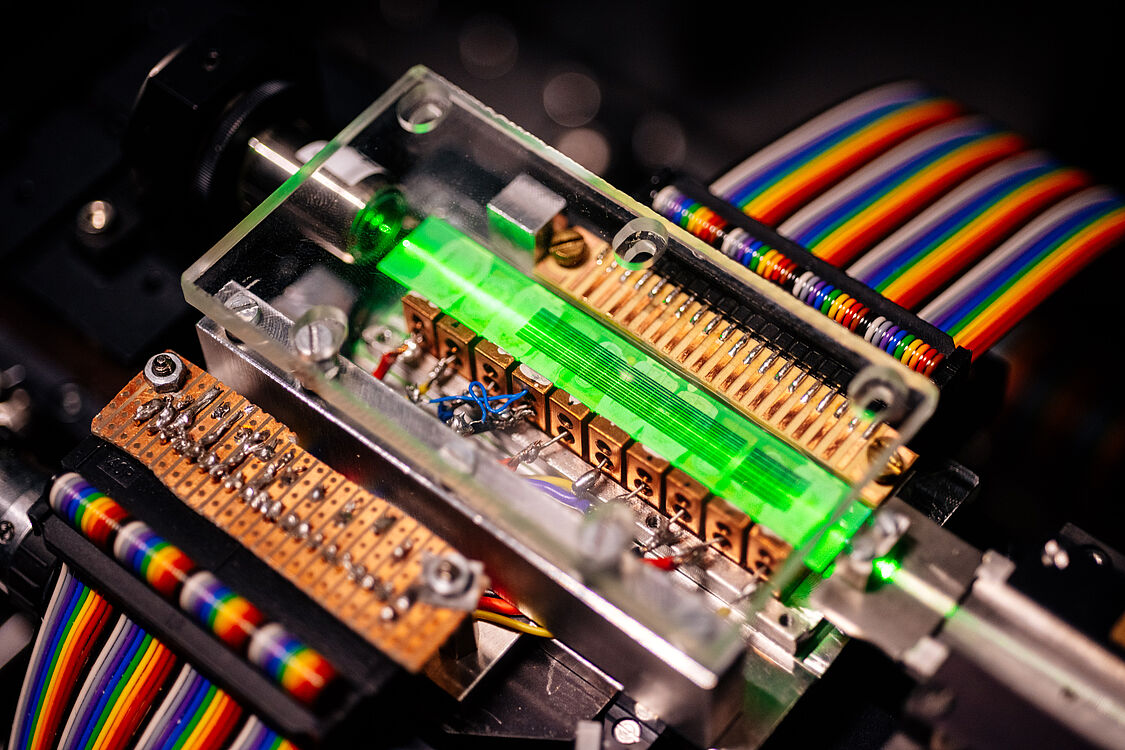


Sustainable energy systems

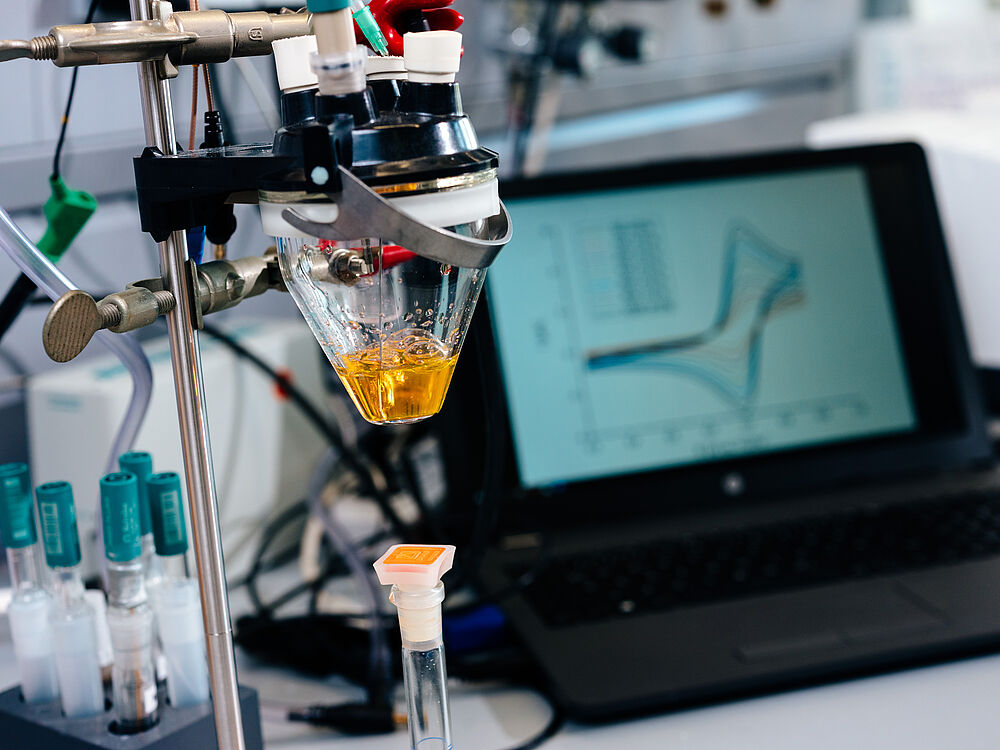

The questions being tackled by Paderborn’s supercomputers are wide-ranging. The research focuses on areas such as sustainable energy conversion by solar cells, quantum systems, and climate-friendly water splitting to produce hydrogen. In photocatalytic water splitting, the energy from sunlight is used to obtain hydrogen – a key building block for the energy revolution. Catalysts can be used in this process to speed it up, make it more efficient and obtain more hydrogen. But which catalyst is the best? Using the computer programs developed in Paderborn, researchers are firstly seeking to observe whether certain catalysts work more or less effectively, and secondly hoping to find new compounds that they can then manufacture in a laboratory themselves and that will be more efficient than the catalysts already in use. Another example is tandem solar cells, whose individual layers can efficiently convert the energy from sunlight and are also translucent, enabling light to reach the second and in some cases even the third layer and be put to use. The researchers are thus seeking to increase the efficiency of the cells. Using ‘Noctua 1’, the predecessor to the current supercomputer, Paderborn University’s researchers were able to propose various materials for tandem solar cells with an efficiency rate of 23 percent.

Paderborn is a national high-performance computing centre

Many universities and non-university research institutes in Germany have local computing centres to meet their own needs. These are often funded by the in-house budget or the German Research Foundation. In addition, there are nine national high-performance computing centres in the NHR Alliance (as of December 2023), including PC2. These make their supercomputers available to users from universities across the whole of Germany.

‘With “Noctua 2”, we have entered an entirely new dimension – we are now one of the top ten academic computing centres in Germany’, as Professor Christian Plessl, computer scientist and chair of the PC2 board, delightedly explains. Only the three national high-performance computing centres making up the Gauss Centre for Supercomputing consortium are larger.

The X building newly constructed at Paderborn University for ‘Noctua 2’ was designed to offer twice as much space as is taken up by the computer. The cooling and fire protection concepts, power supply, and office spaces are also conceived to enable expansion in stages. Sustainability played a major role in the building's construction: the electricity for ‘Noctua 2’ and ‘Otus’ comes entirely from renewable generation and is thus CO2-free. The hot-water cooling is extremely efficient and the waste heat is used to heat this and other buildings. The cooling units are all free of chlorofluorocarbons, which were previously often used as a cooling agent but are damaging to the ozone layer.

Computer systems research in Paderborn

The Paderborn Center for Parallel Computing is rooted in theoretical computer science and has continued to develop over the past 30 years. PC2 is firstly a service provider for various users, offering consulting and services in the field of high-performance computing. Secondly, PC2 also sets standards for computer systems research by studying particularly efficient hardware accelerator technologies. For example, Paderborn’s researchers recently became the first group in the world to successfully break through the computational power barrier of an ‘exaflop’ – more than a quintillion (10^18) floating-point operations per second – for use in the computational sciences. This set a new world record. Their expertise is also put to use in countless research projects at home and abroad.
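To give a feeling for the scale of an exaflop, which corresponds to 10^18 floating-point operations per second, the following Python sketch compares how long a fixed amount of work takes at two different rates. The function name and the comparison rate of one gigaflop (10^9 operations per second) are assumptions chosen purely for illustration and do not describe any particular machine.

```python
EXAFLOP = 10**18   # floating-point operations per second at one exaflop
GIGAFLOP = 10**9   # comparison rate, assumed for illustration only

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def seconds_needed(operations: int, ops_per_second: int) -> float:
    """Time in seconds to complete a number of operations at a constant rate."""
    if ops_per_second <= 0:
        raise ValueError("rate must be positive")
    return operations / ops_per_second


# A workload that keeps an exaflop system busy for exactly one second ...
workload = 10**18
at_exaflop = seconds_needed(workload, EXAFLOP)

# ... would occupy a one-gigaflop machine for a billion seconds.
at_gigaflop_years = seconds_needed(workload, GIGAFLOP) / SECONDS_PER_YEAR
```

Under these assumptions, one second of exaflop computing corresponds to roughly three decades on the slower machine.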

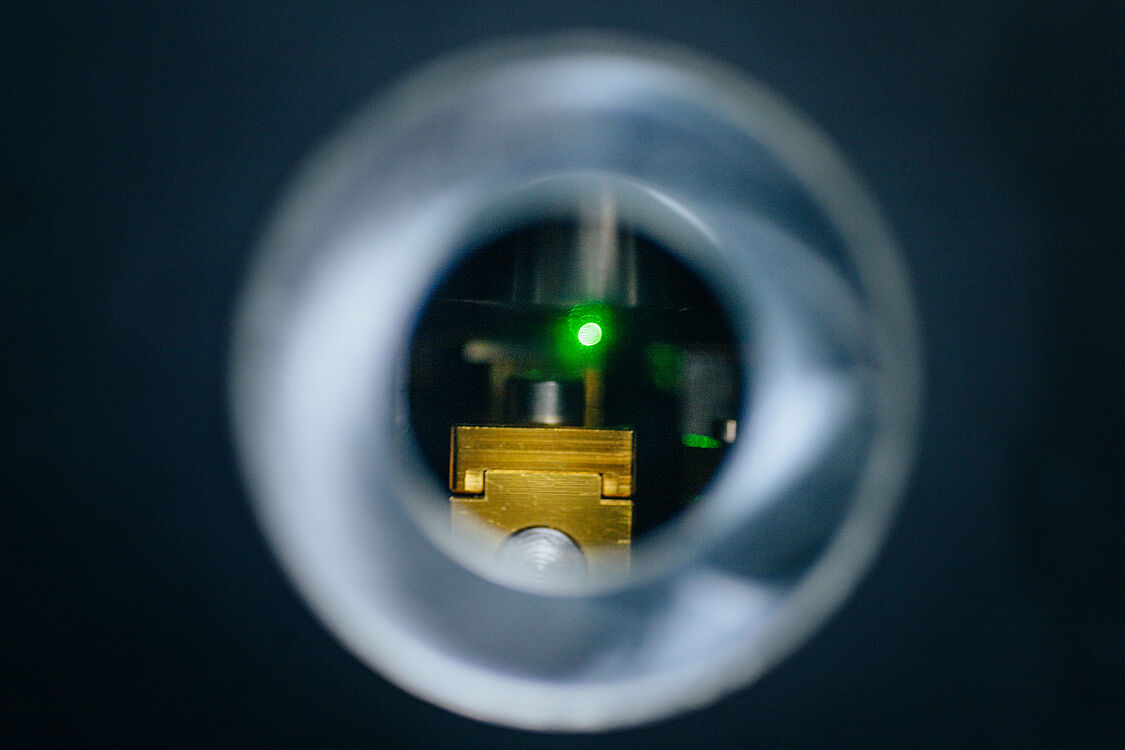

Paderborn University is a world leader in the field of FPGAs. By way of explanation, the processors in computers are the tools that are used to handle tasks. There are unspecific and specific processors, known as ‘central processing units’, or CPUs for short. They can be thought of as an entire toolbox (unspecific) or a single spanner (specific). There are tasks that only require a spanner, making it less efficient to take the entire toolbox. The appropriate CPUs are used depending on requirements. And then there are user-dependent tasks that require hardware components to be specially programmed. To stick to the analogy, these could be a combination of a spanner and tweezers. These are FPGAs, or ‘field programmable gate arrays’. FPGAs are fully specialised computer units, interconnection network units and storage devices that enable massively parallel computing with thousands of simultaneous operations. Paderborn’s computer scientists are world leaders in this field: they are examining how programming FPGAs could be simplified, how customisable they are, and whether there are alternative processes available. ‘We have an FPGA installation that is unique in Europe. This enables the computing unit to be fully specialised for the relevant task. We view this as an extremely promising technology for future computing systems’, Plessl summarises. ‘Noctua 2’ and ‘Otus’ include CPUs, GPUs (graphics processing units) and FPGAs: high-performance computers that not only undertake fundamental research but are also used for hugely application-oriented tasks – in other words, supercomputers.

HPC and quantum computers

Simulations offer in-depth insights into complex systems and are often key to scientific breakthroughs. To tap the full potential of modern computing systems, Paderborn’s researchers are seeking to establish a hybrid model that combines quantum computing and supercomputing, i.e. HPC. As information processing happens in parallel, tasks can be completed more quickly. Parallel structures are available for this, each looking after an individual work package. High-performance computing, like quantum computing, is used to research the physical fundamentals of quantum states. Quantum states are also used to enable faster and faster computing. However, this research is in its infancy. With Noctua, Otus and the expertise of individuals such as physicists, computer scientists, mathematicians and engineers, Paderborn’s researchers are inching towards this goal as each day passes.